By Liz Duffrin, ACN member

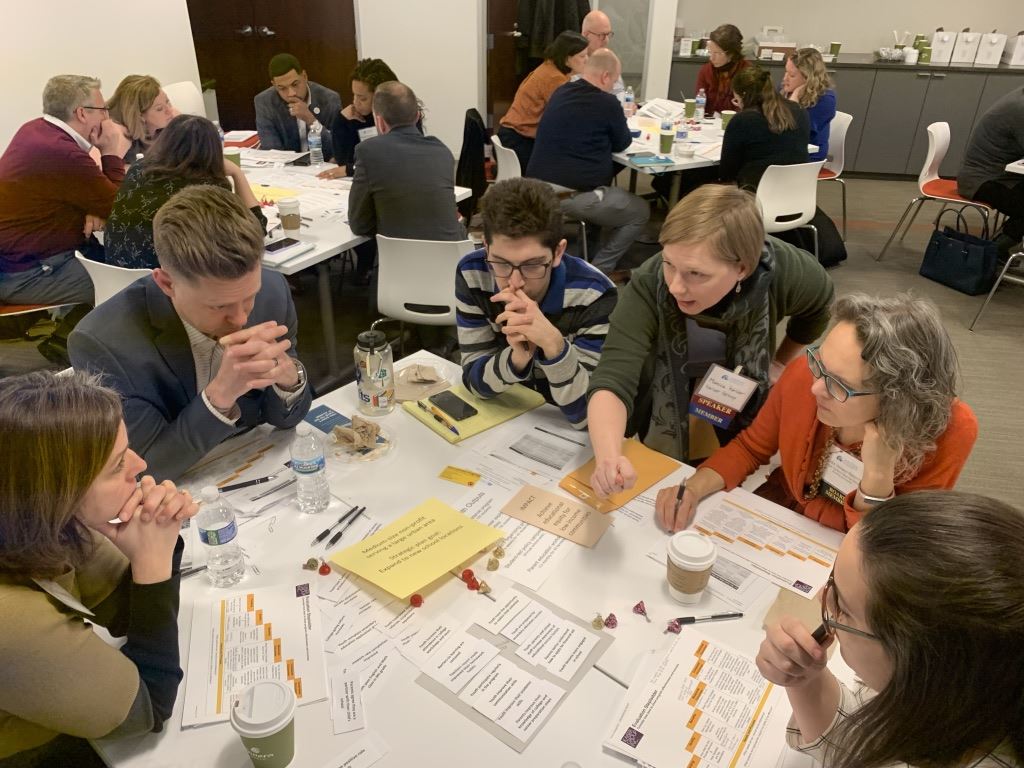

Presenter Monica Kaiser leads a collaborative activity with participants

The only person nonprofits find more intimidating than an evaluator is an auditor, quipped ACN member and evaluation expert Monica Kaiser of Kaiser Group Inc.

But at the ACN quarterly meeting in February, Kaiser made the evaluation process seem not only clearer and less intimidating but even fun. During the morning workshop, she led a packed room of nonprofit professionals and consultants through a series of simulation games to better understand what impact is, how a nonprofit can best demonstrate its impact to funders, and how to determine which type of impact evaluation best serves a nonprofit’s needs.

Here are a few tips from her workshop on making impact evaluation more successful:

1. Create an impact statement.

“So what do we mean when we say impact?” Kaiser asked the group. “The definition we’re going to walk around with today is, ‘the condition we would like our society or our community to be in because of our work.’”

A good impact statement, she explained, is measurable, grounded in research, and ambitious enough that your organization can't claim to have achieved it alone. A health services nonprofit, for instance, might aim to "eliminate disparities in incidences of chronic diseases," she said.

Kaiser also explained what an impact statement is not: It’s not a statistic, such as a percentage increase in a physical fitness score. It’s not a program objective, such as “revise health education curriculum.” It’s also not your nonprofit’s vision, such as “helping all people achieve health across their lifespan.”

“Unlike a vision, impact has to be stated in a way that could potentially be measured,” she explained. “A vision is our hearts on parade. A vision is a beautiful poetic piece of writing. You can find a clue in your vision. But a vision is not an impact statement.”

2. Create a logic model.

Every organization needs a “logic model” or “theory of change” to explain how its day-to-day work will ultimately lead to impact, Kaiser said. These models can vary in format, but they all serve to organize an agency’s thinking about its work, about the data it collects for funders, and about how it communicates its success.

Without a logic model, grant writers are often left to come up with indicators on the fly, she said, and an organization ends up with a laundry list of items to measure “because every grant has a different list based on who wrote it.”

To explain a logic model, Kaiser uses a simple analogy: throwing rocks into a pond produces a splash, then ripples, and ultimately impact, or "The New Pond."

Throwing rocks into the pond represents a nonprofit's services. "The majority of agencies measure their outputs and put them on their websites as if they were outcomes," Kaiser noted. "'We serve 50,000 people' is not an outcome, but it is a measure of your reach, which is absolutely critical to eventually being able to talk about your impact."

The initial splash represents short-term outcomes for participants.

The first ripples are intermediate outcomes that stem from many short-term changes.

Outer ripples are long-term outcomes for participants or changes that participants make in others, such as educators raising student achievement.

The New Pond is the impact that a nonprofit believes will result from its sustained efforts and outcomes.

(Click here to see one logic model template Kaiser uses. The arrows in the template “are the key in a good logic model,” she noted. “They hide the research that says, if you do this, then this happens. You don’t get to invent those connections, you need to make them using research.”)

3. Understand the difference between outcomes and indicators.

One of the trickier parts of creating a logic model is distinguishing between outcomes and indicators, said Kaiser. Indicators are specific measurements, such as the percentage of students who increase their score on the Presidential Physical Fitness Test. Indicators do not belong in a logic model, she insisted. "You are going to have too many and something is going to change and you're not going to want that indicator anymore, but you've publicly committed to it." One nonprofit that used scores on the Presidential Physical Fitness Test as an outcome, for example, was forced to change its message when that test was replaced by another, she said. Meanwhile, its real outcome, "improving children's physical fitness," hadn't changed.

During the ACN workshop, participants worked in small groups to begin creating a logic model, writing an impact statement based on a sample vision along with program activities that would lead to the desired impact. Next, each group got a baggie of outcomes and indicators written on slips of paper and tried to sort them correctly.

Kaiser said that the process of creating a logic model is even more important than having one. “I can walk into an agency, meet with them for two days, and hand them a logic model. That brings no value to that organization whatsoever,” she said. “When that happens, it’s literally just a piece of paper and nobody is going to look at it again.”

The process of creating a logic model, on the other hand, builds buy-in from participants and a shared understanding of what the agency aims to measurably achieve, she said. “The value is in the conversations.”

(For a list of Kaiser's suggested evaluation terms, including more detail on outcomes and indicators, click here.)

4. Choose an evaluation method that best meets your needs.

A logic model lays the groundwork for an efficient data collection and evaluation plan. Once the logic model is complete, she said, an evaluation expert inside or outside the agency should assist in coming up with indicators and a plan for measuring the desired outcomes.

Kaiser thinks of evaluation as a stepladder, with agencies climbing further up the ladder to more complex evaluation methods depending on their needs and resources.

The bottom rungs of the ladder include tracking the number of people an agency serves and evaluating their satisfaction with those services. These measurements “are critical to being successful in evaluation,” she said. “If you don’t do this well, you can’t climb the ladder.”

Further up the ladder is measuring benefits to clients. These can be measured qualitatively, through interviews and surveys. At a higher rung, they can be measured using standardized, validated instruments, she said. "This is a very solid step to be on, and most agencies really only need to be here."

At the top of the ladder are more expensive and time-consuming options. These include comparing outcomes between clients and similar unserved groups. At the very top of the ladder is an evaluation where participants are randomly assigned to receive services or not—the “gold standard” of evaluation.

At the end of the workshop, groups of participants pretended to be nonprofits of varying sizes and were each assigned a strategic plan goal and asked to choose the best evaluation method based on their needs and resources.

After the workshop, Kaiser mentioned that she had covered as much ground in 90 minutes as she usually does in two four-hour sessions. But ACN participants were enthusiastic and unfazed. "The most common comment I heard at the end," she said, "was, 'My brain hurts, but in a good way.'"